ESXi Loses Connectivity to VMFS Datastores.

Introduction

This post explains my recent experiences with certain hosts losing access to some of our VMFS datastores. Hopefully it will help someone avoid the pain that I have been through in diagnosing this issues!

The Symptoms

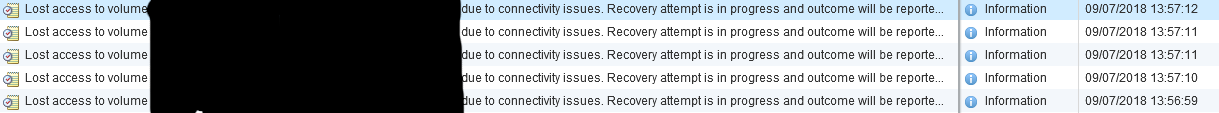

I was initially made aware of this issue as it appeared that certain hosts were losing connectivity to VMFS datastores. Upon investigation I found the following events being logged very frequently against multiple hosts.

Lost access to volume <GUID> (DS1234) due to connectivity issues. Recovery attempt is in progress and outcome will be reported...

These events can be seen in the Web UI but I also ran the following command to monitor the hostd.log file which showed, in real-time, a constant disconnect and reconnect loop.

tail -f /var/log/hostd.log | grep "'Vimsvc.ha-eventmgr'"

Troubleshooting

These errors initially led me to believe that the issue was related to fibre channel connectivity of this datastore, so I had the storage team take a look. After much investigation the storage team assured me that they could not see any issues with the LUNs presented to these hosts.

I then found the following errors logged in the vmkernel.log of the affected hosts.

2018-07-10T01:10:19.044Z cpu38:32861)ScsiDeviceIO: 2652: Cmd(0x43be1974bb80) 0x89, CmdSN 0x1aa23 from world 32814 to dev "naa.60050768010000002000000000012345" failed H:0x5 D:0x0 P:0x0 Possible sense data: 0x0 0x0 0x0.

2018-07-10T01:10:20.033Z cpu30:32853)ScsiDeviceIO: 2595: Cmd(0x43be1af22980) 0x2a, CmdSN 0x8000002a from world 38961 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:20.033Z cpu30:32853)ScsiDeviceIO: 2595: Cmd(0x43be15d21700) 0x2a, CmdSN 0xfffffa800210a940 from world 40502 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:20.033Z cpu30:32853)ScsiDeviceIO: 2595: Cmd(0x43be18fa5dc0) 0x2a, CmdSN 0xfffffa80020a2590 from world 40502 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:20.033Z cpu30:32853)ScsiDeviceIO: 2595: Cmd(0x43be197633c0) 0x2a, CmdSN 0x80000056 from world 38961 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:20.033Z cpu30:32853)ScsiDeviceIO: 2595: Cmd(0x43be15f6d780) 0x2a, CmdSN 0xfffffa8002110f80 from world 40502 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:22.374Z cpu20:33600)NMP: nmp_ThrottleLogForDevice:3333: Cmd 0x16 (0x43be1b76a340, 0) to dev "naa.60050768010000002000000000012345" on path "vmhba0:C0:T4:L2" Failed: H:0x0 D:0x2 P:0x0 Valid sense data: 0x6 0x29 0x0. Act:NONE

2018-07-10T01:10:22.374Z cpu20:33600)ScsiDeviceIO: 2613: Cmd(0x43be1b76a340) 0x16, CmdSN 0xfb0 from world 0 to dev "naa.60050768010000002000000000012345" failed H:0x0 D:0x2 P:0x0 Valid sense data: 0x6 0x29 0x0.

2018-07-10T01:10:22.374Z cpu20:33600)ScsiCore: 1609: Power-on Reset occurred on naa.60050768010000002000000000012345

2018-07-10T01:10:22.388Z cpu26:32873)HBX: 283: 'DS1234': HB at offset 4075520 - Reclaimed heartbeat [Timeout]:

2018-07-10T01:10:22.390Z cpu26:32873)FS3Misc: 1759: Long VMFS rsv time on 'DS1234' (held for 2259 msecs). # R: 1, # W: 1 bytesXfer: 2 sectors

2018-07-10T01:10:27.499Z cpu32:33604)NMP: nmp_ThrottleLogForDevice:3333: Cmd 0x2a (0x43be1b769a40, 38961) to dev "naa.60050768010000002000000000012345" on path "vmhba1:C0:T4:L2" Failed: H:0x0 D:0x2 P:0x0 Valid sense data: 0x6 0x29 0x0. Act:NONE

2018-07-10T01:10:27.499Z cpu32:33604)ScsiDeviceIO: 2613: Cmd(0x43be1b769a40) 0x2a, CmdSN 0x8000005e from world 38961 to dev "naa.60050768010000002000000000012345" failed H:0x0 D:0x2 P:0x0 Valid sense data: 0x6 0x29 0x0.

2018-07-10T01:10:27.499Z cpu32:33604)ScsiCore: 1609: Power-on Reset occurred on naa.60050768010000002000000000012345

2018-07-10T01:10:35.036Z cpu25:32848)ScsiDeviceIO: 2595: Cmd(0x43be15960ac0) 0x2a, CmdSN 0x8000000f from world 38961 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:35.036Z cpu25:32848)ScsiDeviceIO: 2595: Cmd(0x43be197f48c0) 0x2a, CmdSN 0xfffffa8002110f80 from world 40502 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:35.036Z cpu25:32848)ScsiDeviceIO: 2595: Cmd(0x43be19c300c0) 0x2a, CmdSN 0xfffffa8002089940 from world 40502 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:35.036Z cpu25:32848)ScsiDeviceIO: 2595: Cmd(0x43be1b4f6340) 0x2a, CmdSN 0x8000007d from world 38961 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:35.036Z cpu25:32848)ScsiDeviceIO: 2595: Cmd(0x43be1b75a300) 0x2a, CmdSN 0x1aa2c from world 32814 to dev "naa.60050768010000002000000000012345" failed H:0x8 D:0x0 P:0x0

2018-07-10T01:10:37.844Z cpu21:32874)HBX: 283: 'DS1234': HB at offset 4075520 - Reclaimed heartbeat [Timeout]:

2018-07-10T01:10:37.844Z cpu21:32874) [HB state abcdef02 offset 4075520 gen 29 stampUS 3975149635 uuid 5b43f84b-2341c5c8-32b6-90e2baf4630c jrnl <FB 80402> drv 14.61 lockImpl 3]

2018-07-10T01:10:37.847Z cpu21:32874)FS3Misc: 1759: Long VMFS rsv time on 'DS1234' (held for 2714 msecs). # R: 1, # W: 1 bytesXfer: 2 sectors

2018-07-10T01:12:12.584Z cpu8:38859)etherswitch: L2Sec_EnforcePortCompliance:152: client APP1421.eth0 requested promiscuous mode on port 0x6000006, disallowed by vswitch policy

2018-07-10T01:12:12.584Z cpu8:38859)etherswitch: L2Sec_EnforcePortCompliance:152: client APP1421.eth0 requested promiscuous mode on port 0x6000006, disallowed by vswitch policy

2018-07-10T01:24:08.185Z cpu39:35449 opID=9b64b9f9)World: 15554: VC opID 8de99fb1-d856-4a71-ab08-5501dfffc500-7011-ngc-d5-67-78e8 maps to vmkernel opID 9b64b9f9

2018-07-10T01:24:08.185Z cpu39:35449 opID=9b64b9f9)DLX: 3876: vol 'DS1234', lock at 63432704: [Req mode 1] Checking liveness:

This shows that in fact there were file locks on these datastores which appeared to be causing the I/O heartbeat to time out. At this stage, given that it was 2 am and I wanted to go to sleep!, I decided to do a rolling reboot of every host in this cluster which cleared all of the file locks and restored access to this datastore. Yay!

Root Cause Analysis

While I had managed to restore access to this datastore, I was still seeing random occurrences of the previously mentioned file lock error messages. After a bit of digging around I found this article from vmware https://kb.vmware.com/s/article/2113956 which states the following:

##Symptoms An ESXi 5.5 Update 2 or ESXi 6.0 host loses connectivity to a VMFS5 datastore, while using the VAAI ATS heartbeat in your environment.

Cause

A change in the VMFS heartbeat update method was introduced in ESXi 5.5 Update 2, to help optimize the VMFS heartbeat process. Whereas the legacy method involves plain SCSI reads and writes with the VMware ESXi kernel handling validation, the new method offloads the validation step to the storage system. This is similar to other VAAI-related offloads.

This optimization results in a significant increase in the volume of ATS commands the ESXi kernel issues to the storage system and resulting increased load on the storage system. Under certain circumstances, VMFS heartbeat using ATS may fail with false ATS miscompare which causes the ESXi kernel to again verify its access to VMFS datastores. This leads to the Lost access to datastore messages.

Also in the article it explains that in order to disable ATS heartbeat, and instead revert to plain SCSI reads and writes, you can set the VMFS3.UseATSForHBOnVMFS5 advanced setting on your hosts to 0. However, it does state the following:

To resolve this issue, revert the heartbeat to non-ATS mechanisms by disabling ATS heartbeat on ALL hosts sharing the datastore where these errors are seen.

As I am reletavily new to this company (and as I’m sure you’ll appreciate there are many configurations in the environment that were made before my time) I decided to take a look at the affected hosts to see if someone has set this advanced setting previously. This is where I found the actual root cause.

We are currently going through a host refresh for this particular cluster and while the old hosts profile did in fact have ATS heartbeat disabled, all of the new hosts had been given a new profile which did NOT have ATS heartbeat disabled. Apparently, this is because the version of IBM Storwise that we were previously running did not support using ATS for the VMFS heartbeat (see http://www-01.ibm.com/support/docview.wss?uid=ssg1S1005201).

As it happened, the old hosts were all sat in maintenance mode ready to be decommissioned, so I just expedited this process and unmounted the datastores from them. This obvisouly means that all of our new hosts are now using ATS heartbeat for VMFS datastores and the issue has been resolved.